I tested the following key-value stores for set() and get() performance:

- MemcacheDB, using the memcached client protocol

- Tokyo Tyrant (Tokyo Cabinet), using the memcached client protocol

- Redis, using the JRedis Java client

1. Test environment

1.1 Hardware/OS

2 Linux boxes in a LAN, 1 server and 1 test client

Linux CentOS 5.2, 64-bit

Intel(R) Xeon(R) CPU E5410 @ 2.33GHz (L2 cache: 6M), Quad-Core * 2

8G memory

SCSI disk (standalone disk, no other access)

1.2 Software version

db-4.7.25.tar.gz

libevent-1.4.11-stable.tar.gz

memcached-1.2.8.tar.gz

memcachedb-1.2.1-beta.tar.gz

redis-0.900_2.tar.gz

tokyocabinet-1.4.9.tar.gz

tokyotyrant-1.1.9.tar.gz

1.3 Configuration

MemcacheDB startup parameters

Test 100 bytes

./memcachedb -H /data5/kvtest/bdb/data -d -p 11212 -m 2048 -N -L 8192

(Update: As mentioned by Steve, the 100-byte test was missing the -N parameter, so I added it and updated the data.)

Test 20k bytes

./memcachedb -H /data5/kvtest/mcdb/data -d -p 11212 -b 21000 -N -m 2048

Tokyo Tyrant (Tokyo Cabinet) configuration

Use the default Tokyo Tyrant sbin/ttservctl script

Use a .tch (hash table) database

ulimsiz="256m"

sid=1

dbname="$basedir/casket.tch#bnum=50000000" # default 1M is not enough!

maxcon="65536"

retval=0

Redis configuration

timeout 300

save 900 1

save 300 10

save 60 10000

# no maxmemory settings
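
Each `save <seconds> <changes>` line asks Redis to write a snapshot once at least that many seconds have passed and at least that many keys have changed since the last save. A minimal sketch of that check, using the three rules above, might look like this. `SaveRuleSketch`, `SaveRule`, and `shouldSnapshot` are illustrative names rather than Redis internals, and the exact boundary comparison is simplified.

```java
import java.util.List;

class SaveRuleSketch {
    // One "save <seconds> <changes>" line from the Redis configuration.
    static final class SaveRule {
        final long seconds;
        final long changes;
        SaveRule(long seconds, long changes) {
            this.seconds = seconds;
            this.changes = changes;
        }
    }

    // The three rules listed in the configuration above.
    static final List<SaveRule> RULES = List.of(
            new SaveRule(900, 1),
            new SaveRule(300, 10),
            new SaveRule(60, 10000));

    // True if any rule has both its time threshold and its change threshold met.
    static boolean shouldSnapshot(long secondsSinceLastSave, long changesSinceLastSave) {
        for (SaveRule rule : RULES) {
            if (secondsSinceLastSave >= rule.seconds && changesSinceLastSave >= rule.changes) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(shouldSnapshot(30, 1_000_000)); // false: under 60 seconds
        System.out.println(shouldSnapshot(61, 1_000_000)); // true: 60 s and at least 10000 changes
        System.out.println(shouldSnapshot(400, 5));        // false: the 300 s rule needs 10 changes
    }
}
```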

1.4 Test client

Client in Java, JDK1.6.0, 16 threads

Use Memcached client java_memcached-release_2.0.1.jar

The JRedis client was used for the Redis test; another client, JDBC-Redis, performed poorly.
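
The client setup (16 threads issuing requests, with throughput reported as mean requests per second) can be sketched roughly as follows. This is an illustration, not the original test code: `ThroughputSketch`, `KvStore`, and `InMemoryStore` are hypothetical names, a `ConcurrentHashMap` stands in for the real server connection so the sketch runs without a network, and it is written for a current JDK (11 or later) rather than JDK 1.6.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class ThroughputSketch {
    // Hypothetical minimal interface; the real test used memcached and JRedis clients.
    interface KvStore {
        void set(String key, String value);
        String get(String key);
    }

    // In-memory stand-in so the sketch runs without a server (an assumption).
    static final class InMemoryStore implements KvStore {
        private final Map<String, String> map = new ConcurrentHashMap<>();
        public void set(String key, String value) { map.put(key, value); }
        public String get(String key) { return map.get(key); }
    }

    // Splits keys 1..totalKeys across the worker threads, issues one set() per key,
    // and returns the mean number of requests per second.
    static double runWrites(KvStore store, int totalKeys, int threads, String value) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        long start = System.nanoTime();
        for (int t = 0; t < threads; t++) {
            final int offset = t;
            pool.execute(() -> {
                // Worker t handles keys t+1, t+1+threads, t+1+2*threads, ...
                for (int k = offset + 1; k <= totalKeys; k += threads) {
                    store.set(Integer.toString(k), value);
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(10, TimeUnit.MINUTES)) {
                throw new IllegalStateException("workers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        double seconds = (System.nanoTime() - start) / 1e9;
        return totalKeys / seconds;
    }

    public static void main(String[] args) {
        KvStore store = new InMemoryStore();
        String value = "x".repeat(100); // 100-byte ASCII value
        double rps = runWrites(store, 100_000, 16, value);
        System.out.printf("writes/sec (in-memory stand-in): %.0f%n", rps);
    }
}
```

Numbers printed by this stand-in say nothing about the real stores; the point is only the shape of the measurement.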

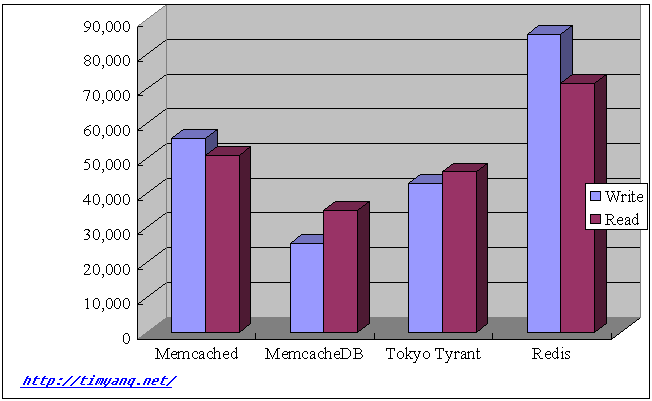

2. Small data size test result

Test 1: keys 1-5,000,000 with 100-byte string values. All set() calls were run first, then the get() test; every get returned a result.

Requests per second (mean)

| Store | Write | Read |
| --- | --- | --- |
| Memcached | 55,989 | 50,974 |
| MemcacheDB | 25,583 | 35,260 |
| Tokyo Tyrant | 42,988 | 46,238 |
| Redis | 85,765 | 71,708 |

Server Load Average

| Store | Write | Read |
| --- | --- | --- |
| Memcached | 1.80, 1.53, 0.87 | 1.17, 1.16, 0.83 |
| MemcacheDB | 1.44, 0.93, 0.64 | 4.35, 1.94, 1.05 |
| Tokyo Tyrant | 3.70, 1.71, 1.14 | 2.98, 1.81, 1.26 |
| Redis | 1.06, 0.32, 0.18 | 1.56, 1.00, 0.54 |

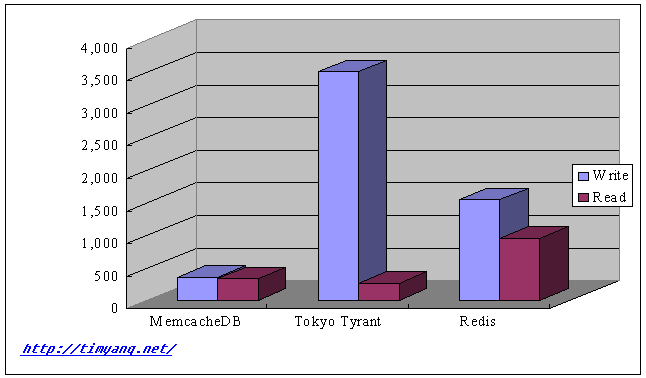

3. Larger data size test result

Test 2: keys 1-500,000 with 20k-byte string values. All set() calls were run first, then the get() test; every get returned a result.

Requests per second (mean)

(Aug 13 update: fixed a bug in get() that read non-existent keys.)

| Store | Write | Read |
| --- | --- | --- |
| MemcacheDB | 357 | 327 |
| Tokyo Tyrant | 3,501 | 257 |
| Redis | 1,542 | 957 |
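
To put the 20k-byte figures in perspective, requests per second can be converted to payload throughput. The sketch below assumes "20k bytes" means 20,000 bytes per value and ignores keys and protocol overhead; `PayloadThroughput` and `mbPerSecond` are illustrative names.

```java
class PayloadThroughput {
    static final int VALUE_BYTES = 20_000; // assumption: "20k bytes" = 20,000 bytes

    // Payload megabytes per second (1 MB = 1,000,000 bytes) for a given request rate.
    static double mbPerSecond(int requestsPerSecond) {
        return requestsPerSecond * (double) VALUE_BYTES / 1_000_000.0;
    }

    public static void main(String[] args) {
        // Request rates copied from the Test 2 results.
        String[] stores = {"MemcacheDB", "Tokyo Tyrant", "Redis"};
        int[] writes = {357, 3501, 1542};
        int[] reads = {327, 257, 957};
        for (int i = 0; i < stores.length; i++) {
            System.out.printf("%-12s write %.1f MB/s, read %.1f MB/s%n",
                    stores[i], mbPerSecond(writes[i]), mbPerSecond(reads[i]));
        }
    }
}
```

Under these assumptions, Tokyo Tyrant's 3,501 writes per second corresponds to roughly 70 MB/s of payload, while MemcacheDB's 357 writes per second is about 7 MB/s.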

4. Some notes about the test

When testing the Redis server, memory usage climbed steadily until all 8G was consumed, then it started using swap (and write speed slowed down). After all memory and swap space were used, the client got exceptions. So using Redis in a production environment should be limited to small data sizes. It is another cache solution rather than persistent storage. Comparing Redis with MemcacheDB/TC may therefore not be fair, because Redis did not actually save data to disk during the test.
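
A rough estimate shows why an in-memory store would run out of room here, assuming the note refers to the 20k-byte test. The sketch counts raw value bytes only (no keys, no per-entry overhead), takes "20k" as 20,000 bytes and "8G" as 8 GiB; `RedisMemoryEstimate` and `rawBytes` are illustrative names.

```java
class RedisMemoryEstimate {
    // Raw value bytes for `keys` values of `valueBytes` each (no per-key overhead).
    static long rawBytes(long keys, long valueBytes) {
        return keys * valueBytes;
    }

    public static void main(String[] args) {
        long test1 = rawBytes(5_000_000L, 100L);   // Test 1: 5,000,000 keys x 100 bytes
        long test2 = rawBytes(500_000L, 20_000L);  // Test 2: 500,000 keys x 20,000 bytes (assumed)
        long ram = 8L * 1024 * 1024 * 1024;        // 8G of server memory, assumed to mean 8 GiB
        double gib = 1L << 30;
        System.out.printf("Test 1 values: %.2f GiB%n", test1 / gib);
        System.out.printf("Test 2 values: %.2f GiB%n", test2 / gib);
        System.out.println("Test 2 values exceed RAM: " + (test2 > ram));
    }
}
```

Under these assumptions the Test 2 values alone come to about 9.3 GiB, more than the server's physical memory, whereas the Test 1 values are under 0.5 GiB.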

Tokyo Cabinet and MemcacheDB were very stable under heavy load; they used very little memory in the set test and less than physical memory in the get test.

MemcacheDB performance is poor when writing large values (20k).

The call response time was not monitored in this test.

The test results have clearly been updated this time. Although it still loses out, TT/TC write performance has clearly improved this time. 😀

very interesting, thanks!

good info. One thing missing in these types of comparisons is a baseline against an RDB. Adding postgres 8.4 to this comparison might help.

You should really test memcached 1.4.0, which actually can use multi-cores.

Thanks for the info,

Can you please detail the ratio of read / write operations and the order of the operations?

Did you first write all the data and then read all the data, or were the operations interleaved?

@asaf

I ran the read test after all writes had finished.

Write test:

key: 1-5,000,000

value: 100-byte-string

After writing everything, I ran the read test:

client.get(random(5,000,000));
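
Put together, the sequence described in this reply looks roughly like the sketch below: write every key first, then issue random reads and confirm each one returns a value. A `HashMap` stands in for the store client and the key count is scaled down so it runs instantly; `WriteThenReadSketch` and `countMisses` are hypothetical names, and the random key is drawn from 1..keyCount on the assumption that keys start at 1. It needs JDK 11 or later for `String.repeat`.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Random;

class WriteThenReadSketch {
    // Writes keys 1..keyCount, then issues `reads` random gets.
    // Returns the number of gets that found no value.
    static int countMisses(int keyCount, int reads, long seed) {
        Map<String, String> store = new HashMap<>(); // stand-in for the real client
        String value = "x".repeat(100);              // 100-byte string value

        // Phase 1: write all keys.
        for (int k = 1; k <= keyCount; k++) {
            store.put(Integer.toString(k), value);
        }

        // Phase 2: random reads over the same key range.
        Random random = new Random(seed);
        int misses = 0;
        for (int i = 0; i < reads; i++) {
            int key = 1 + random.nextInt(keyCount); // uniform in 1..keyCount
            if (store.get(Integer.toString(key)) == null) {
                misses++;
            }
        }
        return misses;
    }

    public static void main(String[] args) {
        System.out.println("misses: " + countMisses(50_000, 50_000, 42L));
    }
}
```

Because every key in the range is written before any read, the miss count is zero, which matches the statement that every get returned a result.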

This benchmark is very helpful to me in evaluating those K/V stores. Thanks a lot.

Very interesting result. Any significant cpu usage difference between those stores?

Hi Tim,

Thanks for the test! Just curious:

1)

Try changing the TCP buffer sizes in class org.jredis.ri.alphazero.connection.ConnectionBase$DefaultConnectionBase. On my Mac, the default values are always fairly large, so perhaps this is something that was missed:

Change the TCP rcv and snd buffer size values:

    public static final class DefaultConnectionSpec implements ConnectionSpec {
        …
        /** */
        private static final int DEFAULT_RCV_BUFF_SIZE = 1024 * 48; // << increased
        /** */
        private static final int DEFAULT_SND_BUFF_SIZE = 1024 * 48; // << increased
        …

2) You may also try changing the TCP socket prefs (same inner class):

Try this:

    public static final class DefaultConnectionSpec implements ConnectionSpec {
        …
        @Override
        public Integer getSocketProperty(SocketProperty property) {
            int value = 0;
            switch (property) {
                case SO_PREF_BANDWIDTH:
                    value = 0; // changed
                    break;
                case SO_PREF_CONN_TIME:
                    value = 2;
                    break;
                case SO_PREF_LATENCY:
                    value = 1; // changed
                    break;
                case SO_RCVBUF:
                    value = DEFAULT_RCV_BUFF_SIZE;
                    break;
                case SO_SNDBUF:
                    value = DEFAULT_SND_BUFF_SIZE;
                    break;
                case SO_TIMEOUT:
                    value = DEFAULT_READ_TIMEOUT_MSEC;
                    break;
            }
            return value;
        }
        …
    }

I assume you’ve downloaded the full distribution from github. Run “mvn install” to build, then run the test again. Curious to see if it makes any difference. If that fails, we’ll try switching to buffered IO streams.
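
For readers who want to try the same settings outside JRedis, here is a minimal sketch using only the standard `java.net.Socket` API. How JRedis applies these values internally is not shown here; `SocketTuningSketch` and `tunedSocket` are hypothetical names, and the preference weights (connection time 2, latency 1, bandwidth 0) are taken from the snippet above.

```java
import java.io.IOException;
import java.net.Socket;

class SocketTuningSketch {
    // Creates an unconnected socket with the requested buffer sizes and
    // performance preferences; the caller can connect() it afterwards.
    static Socket tunedSocket(int rcvBytes, int sndBytes) {
        try {
            Socket socket = new Socket(); // unconnected
            // Arguments are (connectionTime, latency, bandwidth);
            // they only have an effect if set before connecting.
            socket.setPerformancePreferences(2, 1, 0);
            socket.setReceiveBufferSize(rcvBytes); // SO_RCVBUF hint
            socket.setSendBufferSize(sndBytes);    // SO_SNDBUF hint
            return socket;
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        try (Socket socket = tunedSocket(48 * 1024, 48 * 1024)) {
            // The OS may round or cap the requested sizes, so print what was applied.
            System.out.println("SO_RCVBUF = " + socket.getReceiveBufferSize());
            System.out.println("SO_SNDBUF = " + socket.getSendBufferSize());
        }
    }
}
```

The buffer sizes are hints to the operating system, so the values reported back can differ from the ones requested.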

/R

Can you post the actual model# for the Xeon CPU along with operating frequency?

@Joubin

I’ve changed DEFAULT_RCV_BUFF_SIZE/DEFAULT_SND_BUFF_SIZE from 48k to 256k for both the small and the larger data size tests, but there is no significant improvement. The bottleneck here may not be on the client side.

@Gabriel added.

hi Tim

very nice!

can you add mongodb to the benchmark? I would love to see how it performs against redis

Tim,

Thanks. That confirms my own tests, which stressed just one key to isolate the client overhead.

A cursory look at the TT’s Java client seems to indicate that payloads are gzip’d (which JRedis does not, given that the baseline assumption is that you may be using a variety of clients for your db, in which case client specific optimizations are not viable unless the compression algorithm is shared by all client types.)

I’ll update when I have something to add here, but a safe assumption is that compressing the 20K payload has a significant positive impact on the performance of the TT setup.
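
How much compression could matter depends heavily on the payload. The sketch below gzips a 20,000-byte value with the standard `java.util.zip` classes. The content of the test values is not stated in the post, so the repeated-character string here is purely an assumption, and `GzipPayloadSketch` is a hypothetical name. It needs JDK 11 or later for `String.repeat`.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPOutputStream;

class GzipPayloadSketch {
    // Returns the gzip-compressed form of the input bytes.
    static byte[] gzip(byte[] input) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
            gz.write(input);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // The stream is closed at this point, so the gzip trailer has been written.
        return out.toByteArray();
    }

    public static void main(String[] args) {
        // Assumed payload: 20,000 copies of one ASCII character.
        byte[] payload = "x".repeat(20_000).getBytes(StandardCharsets.US_ASCII);
        byte[] compressed = gzip(payload);
        System.out.println(payload.length + " bytes -> " + compressed.length + " bytes");
    }
}
```

A highly repetitive value like this shrinks to a tiny fraction of its size, while random or already-compressed data would barely shrink at all, so any client-side compression makes the 20k results sensitive to what the test values contained.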

Does Redis have an expiration-time setting like memcached does?

I assume this test writes each key only once?

You may see something interesting from Tokyo Tyrant if you test modification in random order. Write performance should drop to match read performance.

Thanks for the Test, we will test Redis in one of the next Projects

To match Redis’s durability (“D” in ACID) using Berkeley DB, simply set the DB_TXN flags to DB_TXN_NOSYNC. A transaction will be considered “durable” when the data is flushed from the in-memory log buffers to the operating system’s filesystem buffers, rather than waiting for the disk to actually write the data (which is much slower). There are many other configuration parameters that can be tuned; you don’t list them in your description, so I can’t say if they were optimal or not (really, “auto-tuning” a database is something that BDB should do for you, but we’re not there yet). If these tests run entirely in cache (can load the entire dataset into memory), then this isn’t a realistic scenario; increase the dataset until the data is 10x the cache size. Also, as someone mentioned, you may want to test under a highly concurrent and highly contentious workload, as that will push the locking systems and create very different results. Finally, update to Berkeley DB 4.8. In 4.8 we’ve dramatically improved our locking subsystem for modern multi-core CPU architectures.

Benchmarks are always tricky. 🙂 Apples-to-apples tests are rare because they are hard to design and harder to run. There is almost always some degree of bias or some overlooked element. It’s unavoidable. That said, benchmarks do serve a purpose so thank you for doing the tests. We hope that you do more. We’re always looking for ways to improve performance.

-greg

Berkeley DB Product Manager, Oracle Corp

Greg, DB_TXN_NOSYNC was already enabled in this test by adding -N to memcachedb.

I will evaluate BDB 4.8.

Greg, I tested BDB 4.8 and there is a significant improvement. Great!

But after comparing with the new versions of TC (tokyocabinet-1.4.39.tar.gz) and TT (tokyotyrant-1.1.37.tar.gz), they had also improved, so the result hasn’t changed much.

FYI, I’ve tested read/write of 100-byte values with 50 threads; the requests per second results are:

Tokyo Tyrant (Tokyo Cabinet): Write 54,189, Read 73,384

MemcacheDB (Berkeley DB 4.8): Write 32,985, Read 59,178

Memcached: Write 103,242, Read 106,102

Because the new test doesn’t change the situation, the test in the blog post still makes sense.

This looks pretty good.
