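Developing a large software system requires modularization. In a distributed system, that usually means splitting functionality into separate remote services (RPC), built with technologies such as Java RMI, Web Services, or Thrift, which Facebook open-sourced. Once a large system has been split into services, the maintainability, manageability, and monitorability of those services, along with high availability and load balancing, matter as much as the services themselves.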

There is currently no off-the-shelf solution for service management. Thrift author Mark Slee noted:

It’s also possible to use Thrift to actually build a services management tool. i.e. have a central Thrift service that can be queried to find out information about which hosts are running which services. We have done this internally, and would share more details or open source it, but it’s a bit too particular to the way our network is set up and how we cache data. The gist of it, though, is that you have a highly available meta-service that you use to configure your actual application server/clients.

Source: [Thrift] Handling failover and high availability with thriftservices

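If we build our own service management framework, it needs the following capabilities:

- Fail fast. This matters a great deal at our company: many remote calls sit on the critical path, where a failure can be tolerated but blocking cannot.

- Failover. Client-side failover, with automatic failure detection and automatic resumption of calls once a node recovers.

- Centralized configuration with push. All clients hold a consistent configuration at any given moment, and each client keeps a long-lived connection to the configuration center.

- Load-balancing policies. Support for round robin, least active, and consistent hash, or a script-based dynamic routing policy. All of these are controlled by the configuration center.

- Dynamically enabling and disabling services and nodes. Services can be started and stopped on the fly (hot deployment); the push mechanism makes this relatively easy to implement.

- Cross-language support. Clients can access services from the common mainstream languages.

- Version management. Different versions of the same service can coexist.

- Access statistics and live runtime parameters. Calls can be counted and observed in real time at the method level.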
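Several of the capabilities above (the three load-balancing policies, client-side failover, and automatic failure detection and recovery) can be illustrated with a small client-side sketch. Everything here is an assumption made for illustration: the `Node` and `Balancer` classes, the `retry_after` cooldown, and the use of MD5 for the hash ring are hypothetical, not the framework's actual design. Fail-fast behavior is assumed to come from a short timeout inside the call itself, which this sketch does not model.

```python
import bisect
import hashlib
import itertools
import time


class Node:
    """One server instance as seen by the client (hypothetical structure)."""

    def __init__(self, address):
        self.address = address
        self.active = 0        # in-flight calls, used by least-active
        self.down_until = 0.0  # node is skipped until this timestamp

    def is_up(self, now):
        return now >= self.down_until


class Balancer:
    """Picks a node per call and fails over when a node is unreachable."""

    def __init__(self, addresses, retry_after=5.0, clock=time.monotonic):
        self.nodes = [Node(a) for a in addresses]
        self.retry_after = retry_after  # assumed cooldown before retrying a node
        self.clock = clock
        self._rr = itertools.count()
        # Consistent-hash ring: 16 virtual points per node, stored as
        # (hash, node index) so tuples sort without comparing Node objects.
        self._ring = sorted(
            (self._hash(f"{n.address}#{v}"), i)
            for i, n in enumerate(self.nodes)
            for v in range(16)
        )

    @staticmethod
    def _hash(key):
        return int(hashlib.md5(key.encode()).hexdigest(), 16)

    def _alive(self):
        now = self.clock()
        return [n for n in self.nodes if n.is_up(now)]

    def round_robin(self):
        alive = self._alive()
        return alive[next(self._rr) % len(alive)] if alive else None

    def least_active(self):
        alive = self._alive()
        return min(alive, key=lambda n: n.active) if alive else None

    def consistent_hash(self, key):
        now = self.clock()
        start = bisect.bisect(self._ring, (self._hash(key), -1))
        # Walk clockwise from the key's position to the first live node.
        for offset in range(len(self._ring)):
            _, idx = self._ring[(start + offset) % len(self._ring)]
            if self.nodes[idx].is_up(now):
                return self.nodes[idx]
        return None

    def call(self, func, pick=None):
        """Run func(address); on ConnectionError mark the node down and retry."""
        pick = pick or self.round_robin
        for _ in range(len(self.nodes)):
            node = pick()
            if node is None:
                break
            node.active += 1
            try:
                return func(node.address)
            except ConnectionError:
                # Failure detection: skip this node until the cooldown expires,
                # after which it is tried again automatically (recovery).
                node.down_until = self.clock() + self.retry_after
            finally:
                node.active -= 1
        raise ConnectionError("no live node available")
```

A caller would wrap its actual RPC in a function that takes an address, for example `balancer.call(lambda addr: invoke(addr))`, and pass `pick=lambda: balancer.consistent_hash(key)` when requests for the same key should land on the same node. In the design described above, the choice of policy would come from the configuration center rather than from the caller.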

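Access strategy

The service framework favors direct connections: the client connects straight to the server, with no additional physical proxy layer in between. The framework itself is responsible only for central configuration, access policy, service discovery, and configuration notification.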
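The split of responsibilities can be sketched as follows: a configuration center holds the node list for each service and pushes changes to subscribed clients, while the clients connect to the servers directly. This is a minimal in-process sketch; `ConfigCenter`, `DirectClient`, and the callback-based push are hypothetical stand-ins for what would really be a network service reached over long-lived connections.

```python
class ConfigCenter:
    """In-memory stand-in for the central configuration service (hypothetical)."""

    def __init__(self):
        self._nodes = {}     # service name -> list of "host:port"
        self._watchers = {}  # service name -> list of callbacks

    def subscribe(self, service, callback):
        """Register a client and hand it the current node list immediately."""
        self._watchers.setdefault(service, []).append(callback)
        callback(list(self._nodes.get(service, [])))

    def publish(self, service, addresses):
        """Replace the node list and push it to every subscribed client."""
        self._nodes[service] = list(addresses)
        for callback in self._watchers.get(service, []):
            callback(list(addresses))


class DirectClient:
    """Learns addresses from the center, then talks to servers directly."""

    def __init__(self, center, service):
        self.addresses = []
        center.subscribe(service, self._on_update)

    def _on_update(self, addresses):
        # Called by the center on every configuration change (the "push").
        self.addresses = addresses

    def target(self):
        """Return an address to connect to; no proxy sits in between."""
        if not self.addresses:
            raise LookupError("no node registered for this service")
        return self.addresses[0]


center = ConfigCenter()
center.publish("counter", ["10.0.0.1:9090", "10.0.0.2:9090"])
client = DirectClient(center, "counter")

# Disabling a node is just another publish; the client sees it at once.
center.publish("counter", ["10.0.0.2:9090"])
print(client.target())
```

Because the center only distributes metadata, it stays off the request path: if it becomes unavailable, clients can keep using the last node list they received.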

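Special routing requirements

Ordinary service access is covered by the three policies above (round robin, least active, and consistent hash). In practice, however, we ran into a different requirement. Take a remote counting service: a read can go to any one node at random, but a write has to reach every service node. We therefore need broadcast-style access. And because the counting service has strict real-time and consistency requirements, an asynchronous mechanism such as Pub/Sub is not suitable, so the client also has to support synchronous broadcast calls.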
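The read-one, write-all pattern for the counting service might look like the sketch below. The `CounterClient` and `InMemoryCounterNode` classes are hypothetical; a real node would be a remote stub, and a real client would also need a policy for partial failures, which this sketch handles only by raising the first error.

```python
import random
from concurrent.futures import ThreadPoolExecutor


class InMemoryCounterNode:
    """Stand-in for one remote counter node (hypothetical)."""

    def __init__(self):
        self.data = {}

    def get(self, key):
        return self.data.get(key, 0)

    def incr(self, key, delta):
        self.data[key] = self.data.get(key, 0) + delta
        return self.data[key]


class CounterClient:
    """Reads from one random node; writes to all nodes synchronously."""

    def __init__(self, nodes, rng=random):
        self.nodes = list(nodes)
        self.rng = rng

    def read(self, key):
        # Any node can serve a read, since every write reaches all nodes.
        return self.rng.choice(self.nodes).get(key)

    def incr(self, key, delta=1):
        # Synchronous broadcast: send to every node in parallel, then block
        # until all have answered. f.result() re-raises a node's exception.
        with ThreadPoolExecutor(max_workers=len(self.nodes)) as pool:
            futures = [pool.submit(n.incr, key, delta) for n in self.nodes]
            return [f.result() for f in futures]


nodes = [InMemoryCounterNode() for _ in range(3)]
counter = CounterClient(nodes)
counter.incr("likes", 2)
print(counter.read("likes"))
```

Issuing the writes in parallel keeps the latency of a broadcast close to that of the slowest node rather than the sum of all nodes, which matters when the call sits on the critical path.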

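The tension between coupling and intrusiveness

Before designing the service management system, we hoped not to tie it to one specific technology such as Thrift, so that clients and service implementers would not need to care much about the underlying technology. During implementation, however, we ran into a number of conflicts.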

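IDL intrusion

Once Thrift is in use, a service implementation can hardly avoid the Thrift IDL. Users have to maintain the IDL and the Thrift-generated code themselves, while the service framework supports registering Thrift services in the configuration system. It is possible to implement a service without going through the IDL, but the framework-related functionality then becomes costly to implement and maintain.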

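RPC framework intrusion

The Thrift transport can run over TCP (sockets) or HTTP

This is also a very nice feature. In some cases an HTTP transport is very convenient: HTTP carries some extra overhead, but its mature surrounding tooling is enough to offset that cost. With TCP, much of the real-time status monitoring has to be built from scratch by the service system.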

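Thrift's version overlaps somewhat with the service version

Services involve version management, which we would like to handle through service discovery, but Thrift has its own versioning design as well.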

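At heart, these conflicts come down to whether the features the service framework needs should be implemented by the framework itself or delegated to Thrift. Many Thrift users, Twitter's rpc-client for example, simply build their enhancements directly on top of Thrift.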

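Although the problems above remain open, the first service at our company to be managed by the service framework is about to go live. It will soon carry billions of calls per day, and new challenges will surface along with them.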

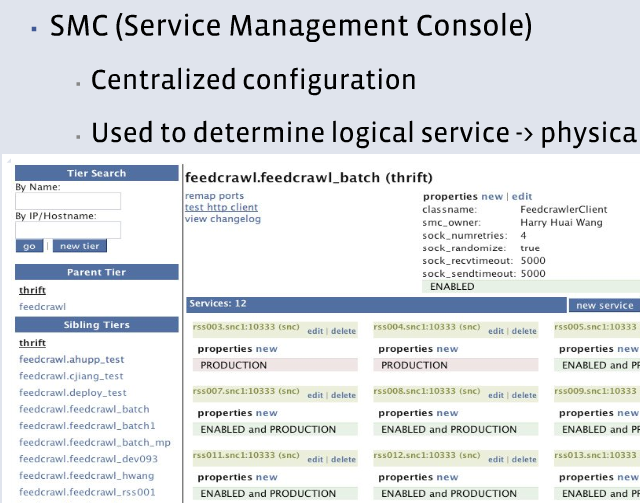

Figure 1: Facebook Service Management Console

(Source: http://www.slideshare.net/adityaagarwal/qcon Slide 27)