I tested a hello world web server written in C, Erlang, Java and the Go programming language.

* C, using the well-known high-performance web server nginx, with a hello world nginx module

* Erlang/OTP

* Java, originally using the MINA 2.0 framework, now replaced by the JBoss Netty framework.

* Go, http://golang.org/

1. Test environment

1.1 Hardware/OS

2 Linux boxes in a gigabit ethernet LAN, 1 server and 1 test client

Linux Centos 5.2 64bit

Intel(R) Xeon(R) CPU E5410 @ 2.33GHz (L2 cache: 6M), Quad-Core * 2

8G memory

SCSI disk (standalone disk, no other access)

1.2 Software version

nginx, nginx-0.7.63.tar.gz

Erlang, otp_src_R13B02-1.tar.gz

Java, jdk-6u17-linux-x64.bin, mina-2.0.0-RC1.tar.gz, netty-3.2.0.ALPHA1-dist.tar.bz2

Go, hg clone -r release https://go.googlecode.com/hg/ $GOROOT (Nov 12, 2009)

1.3 Source code and configuration

Linux, run sysctl -p

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

kernel.panic = 1

net.ipv4.tcp_rmem = 8192 873800 8738000

net.ipv4.tcp_wmem = 4096 655360 6553600

net.ipv4.ip_local_port_range = 1024 65000

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

# ulimit -n

150000

C: nginx hello world module; copy the code of ngx_http_hello_module.c from https://timyang.net/web/nginx-module/

In nginx.conf, set “worker_processes 1; worker_connections 10240” for the single-CPU test and “worker_processes 4; worker_connections 2048” for the multi-core test. Turn off all access and debug logging in nginx.conf, as follows:

worker_processes 1;

worker_rlimit_nofile 10240;

events {

worker_connections 10240;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 0;

server {

listen 8080;

server_name localhost;

location / {

root html;

index index.html index.htm;

}

location /hello {

ngx_hello_module;

hello 1234;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

}

$ taskset -c 1 ./nginx or $ taskset -c 1-7 ./nginx

Erlang hello world server

The source code is available at yufeng’s blog, see http://blog.yufeng.info/archives/105

Just copy the code after “cat ehttpd.erl”, and compile it.

$ erlc ehttpd.erl

$ taskset -c 1 erl +K true +h 99999 +P 99999 -smp enable +S 2:1 -s ehttpd

$ taskset -c 1-7 erl +K true -s ehttpd

We use taskset to limit the Erlang VM to a single CPU core or to let it use all the cores. Of the commands above, the 2nd line runs in single-CPU mode and the 3rd line runs in multi-core mode.

Java source code: save the 2 classes as HttpServer.java and HttpProtocolHandler.java, and add the necessary imports.

public class HttpServer {

public static void main(String[] args) throws Exception {

SocketAcceptor acceptor = new NioSocketAcceptor(4);

acceptor.setReuseAddress( true );

int port = 8080;

String hostname = null;

if (args.length > 1) {

hostname = args[0];

port = Integer.parseInt(args[1]);

}

// Bind

acceptor.setHandler(new HttpProtocolHandler());

if (hostname != null)

acceptor.bind(new InetSocketAddress(hostname, port));

else

acceptor.bind(new InetSocketAddress(port));

System.out.println("Listening on port " + port);

Thread.currentThread().join();

}

}

public class HttpProtocolHandler extends IoHandlerAdapter {

public void sessionCreated(IoSession session) {

session.getConfig().setIdleTime(IdleStatus.BOTH_IDLE, 10);

session.setAttribute(SslFilter.USE_NOTIFICATION);

}

public void sessionClosed(IoSession session) throws Exception {}

public void sessionOpened(IoSession session) throws Exception {}

public void sessionIdle(IoSession session, IdleStatus status) {}

public void exceptionCaught(IoSession session, Throwable cause) {

session.close(true);

}

static IoBuffer RESULT = null;

public static String HTTP_200 = "HTTP/1.1 200 OK\r\nContent-Length: 13\r\n\r\n" +

"hello world\r\n";

static {

RESULT = IoBuffer.allocate(32).setAutoExpand(true);

RESULT.put(HTTP_200.getBytes());

RESULT.flip();

}

public void messageReceived(IoSession session, Object message)

throws Exception {

if (message instanceof IoBuffer) {

IoBuffer buf = (IoBuffer) message;

int c = buf.get();

if (c == 'G' || c == 'g') {

session.write(RESULT.duplicate());

}

session.close(false);

}

}

}
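The MINA handler above hard-codes Content-Length: 13, which matches the 13-byte body "hello world\r\n". As a side illustration only (this Go code was not part of the benchmark, and the function name rawResponse is made up for this sketch), the header can be derived from the body so the two cannot drift apart:

```go
package main

import (
	"fmt"
	"strconv"
)

// rawResponse builds a minimal HTTP/1.1 200 response whose Content-Length
// is computed from the body instead of being typed in by hand.
func rawResponse(body string) string {
	return "HTTP/1.1 200 OK\r\nContent-Length: " + strconv.Itoa(len(body)) + "\r\n\r\n" + body
}

func main() {
	// Same body as HTTP_200 in the MINA handler: 11 characters plus CRLF.
	fmt.Printf("%q\n", rawResponse("hello world\r\n"))
}
```

If the body is changed later, the header follows automatically, which avoids clients hanging or truncating on a mismatched length.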

Nov 24 update: because the MINA code above neither parses the HTTP request nor handles the rest of the HTTP protocol, it was replaced with org.jboss.netty.example.http.snoop.HttpServer from the Netty examples, with all the string builder code removed from HttpRequestHandler.messageReceived() and HttpRequestHandler.writeResponse() changed to return just a “hello world” result. Please read the source code and the Netty documentation for more information.

$ taskset -c 1-7 \

java -server -Xmx1024m -Xms1024m -XX:+UseConcMarkSweepGC -classpath . test.HttpServer 192.168.10.1 8080

We use taskset to restrict Java to CPUs 1-7 and keep it off CPU 0, because we want CPU 0 dedicated to system calls (Linux uses CPU 0 for network interrupts).

Go language, source code

package main

import (

"http";

"io";

"runtime";

)

func HelloServer(c *http.Conn, req *http.Request) {

io.WriteString(c, "hello, world!\n");

}

func main() {

runtime.GOMAXPROCS(8); // 8 cores

http.Handle("/", http.HandlerFunc(HelloServer));

err := http.ListenAndServe(":8080", nil);

if err != nil {

panic("ListenAndServe: ", err.String())

}

}

$ 6g httpd2.go

$ 6l httpd2.6

$ taskset -c 1-7 ./6.out
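The Go source above targets the November 2009 release, whose package paths and handler signature no longer compile. For readers on a current toolchain, a rough equivalent might look like the sketch below. It is not the code that was benchmarked. The smokeTest helper and the "check" argument are additions made up for this sketch, and the explicit GOMAXPROCS call is dropped because Go has defaulted it to the number of available CPUs since Go 1.5.

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"net/http/httptest"
	"os"
)

// helloServer writes the same body as the benchmarked handler.
func helloServer(w http.ResponseWriter, req *http.Request) {
	io.WriteString(w, "hello, world!\n")
}

// smokeTest (hypothetical helper) serves helloServer on a throwaway local
// port, fetches "/" once, and returns the response body.
func smokeTest() (string, error) {
	srv := httptest.NewServer(http.HandlerFunc(helloServer))
	defer srv.Close()
	resp, err := http.Get(srv.URL)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	// "check" is an argument invented for this sketch; it runs the
	// self-check instead of starting the real server.
	if len(os.Args) > 1 && os.Args[1] == "check" {
		body, err := smokeTest()
		fmt.Printf("%q %v\n", body, err)
		return
	}
	http.HandleFunc("/", helloServer)
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

Built with go build, the binary can be pinned with taskset in the same way as ./6.out. Numbers from a modern runtime would not be comparable with the 2009 results reported here.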

1.4 Performance test client

ApacheBench client, for 30, 100, 1,000, 5,000 concurrent threads

ab -c 30 -n 1000000 http://192.168.10.1:8080/

ab -c 100 -n 1000000 http://192.168.10.1:8080/

1,000 threads: 334 from each of 3 different machines

ab -c 334 -n 334000 http://192.168.10.1:8080/

5,000 threads: 1,667 from each of 3 different machines

ab -c 1667 -n 334000 http://192.168.10.1:8080/
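The per-machine figures of 334 and 1,667 come from dividing the target concurrency by the 3 client machines and rounding up. A small sketch of that arithmetic (the function name perClient is made up for illustration):

```go
package main

import "fmt"

// perClient splits a target concurrency across several load-generating
// machines, rounding up so the combined total is at least the target.
func perClient(total, clients int) int {
	return (total + clients - 1) / clients
}

func main() {
	for _, total := range []int{1000, 5000} {
		c := perClient(total, 3)
		fmt.Printf("target %d: ab -c %d on each of 3 machines (%d combined)\n", total, c, c*3)
	}
}
```

Rounding up means the combined load slightly exceeds the nominal target (1,002 and 5,001 connections), which is negligible at this scale.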

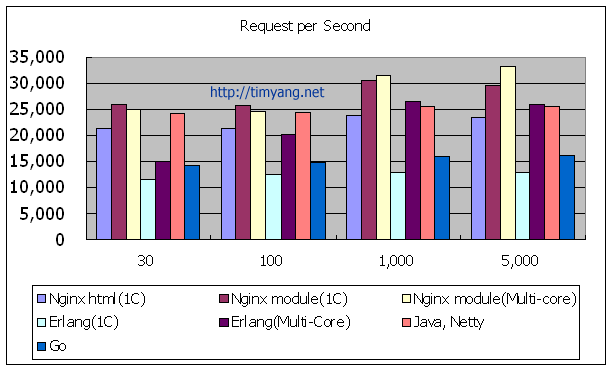

2. Test results

2.1 Requests per second

| | 30 (threads) | 100 | 1,000 | 5,000 |
| --- | --- | --- | --- | --- |
| Nginx html(1C) | 21,301 | 21,331 | 23,746 | 23,502 |
| Nginx module(1C) | 25,809 | 25,735 | 30,380 | 29,667 |
| Nginx module(Multi-core) | 25,057 | 24,507 | 31,544 | 33,274 |
| Erlang(1C) | 11,585 | 12,367 | 12,852 | 12,815 |
| Erlang(Multi-Core) | 15,101 | 20,255 | 26,468 | 25,865 |
| Java, Mina2(without HTTP parse) | 30,631 | 26,846 | 31,911 | 31,653 |
| Java, Netty | 24,152 | 24,423 | 25,487 | 25,521 |
| Go | 14,080 | 14,748 | 15,799 | 16,110 |
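One way to read the throughput table is to compare each multi-core row with its single-core counterpart. The sketch below does that division for a few cells copied from the table; the function name speedup is made up for illustration.

```go
package main

import "fmt"

// speedup returns how many times faster the multi-core run was than the
// single-core run, given requests per second for each.
func speedup(singleRPS, multiRPS float64) float64 {
	return multiRPS / singleRPS
}

func main() {
	// Requests per second copied from the table (1C vs. multi-core).
	fmt.Printf("Erlang, 30 threads:          %.2fx\n", speedup(11585, 15101))
	fmt.Printf("Erlang, 1,000 threads:       %.2fx\n", speedup(12852, 26468))
	fmt.Printf("Nginx module, 5,000 threads: %.2fx\n", speedup(29667, 33274))
}
```

On these figures Erlang roughly doubles its throughput at 1,000 threads when given more cores, while the nginx module gains only about 12% at 5,000 threads.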

2.2 Latency: 99% of requests served within (ms)

| | 30 (threads) | 100 | 1,000 | 5,000 |
| --- | --- | --- | --- | --- |
| Nginx html(1C) | 1 | 4 | 42 | 3,079 |
| Nginx module(1C) | 1 | 4 | 32 | 3,047 |
| Nginx module(Multi-core) | 1 | 6 | 205 | 3,036 |
| Erlang(1C) | 3 | 8 | 629 | 6,337 |
| Erlang(Multi-Core) | 2 | 7 | 223 | 3,084 |
| Java, Netty | 1 | 3 | 3 | 3,084 |
| Go | 26 | 33 | 47 | 9,005 |
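The latency figures are 99th-percentile values taken from the ab output. For readers unfamiliar with the metric, here is a sketch of a nearest-rank percentile over a set of latencies. It illustrates the idea only: the exact method ab uses internally is not confirmed here, and the sample data is made up.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// percentile returns the nearest-rank p-th percentile (0 < p <= 100) of the
// given latencies: the smallest sample such that at least p percent of all
// samples are less than or equal to it.
func percentile(latenciesMs []int, p float64) int {
	if len(latenciesMs) == 0 {
		return 0
	}
	sorted := append([]int(nil), latenciesMs...) // copy, leave the input untouched
	sort.Ints(sorted)
	rank := int(math.Ceil(p * float64(len(sorted)) / 100))
	if rank < 1 {
		rank = 1
	}
	if rank > len(sorted) {
		rank = len(sorted)
	}
	return sorted[rank-1]
}

func main() {
	// Made-up sample: 99 fast requests and one slow outlier.
	sample := make([]int, 0, 100)
	for i := 0; i < 99; i++ {
		sample = append(sample, 2)
	}
	sample = append(sample, 3000)
	fmt.Println("p99:", percentile(sample, 99), "ms, max:", percentile(sample, 100), "ms")
}
```

The made-up sample shows why a percentile is reported rather than the maximum: a single slow request dominates the maximum but does not move the 99th percentile.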

3. Notes

* With a large number of concurrent connections, C, Erlang and Java show no big difference in performance; the results are very close.

* Java runs better with a small number of connections, but the Java code in this test (the MINA code) doesn’t parse the HTTP request header.

* Although Mr. Yu Feng (the Erlang guru in China) mentioned that Erlang performs better on a single CPU (it avoids context switches), the results show that Erlang has high latency (> 1 s) under 1,000 or 5,000 connections.

* Go is very close to Erlang, but still not good under heavy load (5,000 threads).

After redoing the 1,000 and 5,000 tests on Nov 18:

* The nginx module is the winner at 5,000 concurrent requests.

* Although Go has room for improvement, its performance stays the same from 30 to 5,000 threads.

* Erlang is impressive with a large number of concurrent requests, and is still as good as nginx at 5,000 threads.

4. Update Log

Nov 12, changed worker_connections in nginx.conf from 1024 to 10240

Nov 13, added runtime.GOMAXPROCS(8); to the Go code, added the sysctl -p environment

Nov 18, realized that ApacheBench itself is a bottleneck at 1,000 or 5,000 threads, so used 3 clients on 3 different machines to redo all the 1,000 and 5,000 concurrent tests.

Nov 24, used Netty with a full HTTP implementation to replace Mina 2 for the Java web server. It is still very fast, with low latency, after adding the HTTP handling code.

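[…] And here are the results everyone has been waiting for. As expected, Nginx is fast, but mina2 appears to edge it out for the top spot. Go's simple web server (almost the same as the one above) also delivers performance that is not far behind (at least not an order of magnitude slower). Go is a newborn language, but its future looks very promising (first, I want to verify the performance with my own hands). […]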

[…] A performance comparison […]

[…] 11 Nov 09 – Tim did a web server performance test between C, Erlang, Java and Go. Result in a comprehensive graph […]

Does anyone know why, at 1,000 threads, Erlang (Multi-Core) is a little bit faster than Java, but at 5,000 threads Java wins by a little bit again?

[…] quickly, several opinions and experimental results appeared. Tim Yang, for instance, shows the performance obtained by a server application developed in Java (using Mina), C (with Nginx) and […]

Carfield, please ignore the deviation if it is < 3%. If you run an independent test several times and get a different result every time, but always within 3%, we can consider the performance to be the same.
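The 3% rule of thumb above can be written down as a simple check. In this sketch the difference is measured relative to the larger of the two values, which is one reasonable choice among several; the function name sameWithin and the sample figures are made up for illustration.

```go
package main

import (
	"fmt"
	"math"
)

// sameWithin reports whether two throughput figures differ by less than
// tol (for example 0.03 for 3%), measured relative to the larger value.
func sameWithin(a, b, tol float64) bool {
	larger := math.Max(a, b)
	if larger == 0 {
		return true
	}
	return math.Abs(a-b)/larger < tol
}

func main() {
	// Hypothetical requests-per-second figures from two repeated runs.
	fmt.Println(sameWithin(25000, 25400, 0.03)) // about 1.6% apart
	fmt.Println(sameWithin(25000, 27000, 0.03)) // about 7.4% apart
}
```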

to Koala Yeung:

The Go test uses epoll and goroutines.

to Koala Yeung:

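gccgo's goroutines are still implemented as threads, which is very different from 6g's lightweight threads. gccgo is still far from complete; for now the usable toolchain is still the 6g/6l series.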

to Carfield Yim:

Due to Erlang's fair process scheduler, the cost and latency increase at >5,000 threads. In this situation, enabling HiPE helps a lot.

It would be great to see the same test done in an open load model, i.e. performance at certain arrival rates rather than at a number of concurrent clients. A good tool for such a test is Tsung http://tsung.erlang-projects.org/

It is also good to list the underlying IO libs each of the implementations uses.

Another good Java candidate to be compared is Grizzly https://grizzly.dev.java.net/

You can easily get a hello world server by modifying the code at http://weblogs.java.net/blog/jfarcand/archive/2008/07/extending_the_g.html
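To make the open load model suggested above concrete: ab keeps a fixed number of requests in flight and only sends a new one when an old one finishes, whereas an open model sends requests on a fixed schedule regardless of how the server is keeping up. The sketch below only computes such a schedule. It is not how Tsung works internally, and the function name arrivalOffsets is made up for illustration.

```go
package main

import (
	"fmt"
	"time"
)

// arrivalOffsets returns when each request should be sent, relative to the
// start of the test, for a fixed arrival rate. In an open model these sends
// happen on schedule whether or not earlier requests have completed.
func arrivalOffsets(ratePerSec int, total time.Duration) []time.Duration {
	if ratePerSec <= 0 || total <= 0 {
		return nil
	}
	interval := time.Second / time.Duration(ratePerSec)
	if interval <= 0 {
		return nil // rate too high to express in whole nanoseconds
	}
	var offsets []time.Duration
	for t := time.Duration(0); t < total; t += interval {
		offsets = append(offsets, t)
	}
	return offsets
}

func main() {
	// 1,000 requests per second for 5 ms.
	for _, off := range arrivalOffsets(1000, 5*time.Millisecond) {
		fmt.Println("send at", off)
	}
}
```

A load generator built on this schedule would start one goroutine per offset, so a slow server sees requests pile up instead of the arrival rate quietly dropping.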

@yufeng there is no difference after enabling HiPE in my environment, with

erlc +native +"{hipe, [o3]}" ehttpd.erl

I compared at 100 and 5,000; the test results are basically the same.

I’d like to suggest to use Netty 3 instead of MINA 2 for Java, since it is known to perform better, and more actively maintained: http://www.jboss.org/netty/

You will also find some information about Netty’s HTTP performance in the ‘Testimonials’ and ‘Performance’ page.

There’s a simple HTTP server example in the ‘Documentation’ page, so it should not be very difficult to write a hello world web server quickly. Please feel free to contact me if you have any problem.

Trustin, thanks for the information. I have already replaced Mina with Netty in this test. Please refresh the page for the latest results.

Cool, now the numbers are more reasonable 🙂

Oh, I forgot to mention: nginx is a full-featured HTTP server, so it sends more headers than some of the other servers above, e.g.:

$ curl -i http://localhost/

HTTP/1.1 200 OK

Server: nginx/0.7.63

Date: Wed, 25 Nov 2009 02:07:56 GMT

Content-Type: text/html

Content-Length: 13

Connection: keep-alive

Hello, world

rath's thoughts…

C (Nginx), Erlang (OTP), Java (Mina), Google Go hello world web server benchmark test, to 꽃띠앙….

Thanks for the update and the number looks pretty nice! 🙂

By the way, in “3. Notes”, “* Java runs better on small connections, but the code in this test doesn’t parse the HTTP request header.” now seems invalid? Also, I would replace the MINA source code from the page with the full Netty code since the test result has been replaced.

On Erlang, +h 99999 seems overkill. A far lower value is better, as every process does very little processing, so not much heap is used.

You hit GC when the process dies, so you are GCing 99999, which is a lot of memory and mainly unused.

Try the normal setup or a very low value such as 512 or 1024.
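A rough sense of scale for the +h setting discussed above, as a sketch under stated assumptions: +h is taken to be measured in machine words, as the erl documentation describes it, the VM is 64-bit with 8-byte words, and each concurrent connection is served by its own process. None of these figures were measured in this test.

```go
package main

import "fmt"

// initialHeapBytes estimates the memory reserved up front when every process
// starts with heapWords words of heap.
func initialHeapBytes(heapWords, wordBytes, processes int64) int64 {
	return heapWords * wordBytes * processes
}

func main() {
	const wordBytes = 8    // assumption: 64-bit Erlang VM
	const processes = 5000 // assumption: one process per concurrent connection
	for _, words := range []int64{99999, 1024, 512} {
		b := initialHeapBytes(words, wordBytes, processes)
		fmt.Printf("+h %d: about %.1f MiB\n", words, float64(b)/(1<<20))
	}
}
```

Under these assumptions +h 99999 reserves close to 4 GB across 5,000 processes, a large share of the server's 8G of memory, while 512 or 1024 words stays in the tens of megabytes.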

What you are testing? Socket performance? It’s roughly the same.

There is no point in such type of tests. Write normal dynamic application (just wondering how you’ll do it with pure nginx 😉 ) and compare everything – development time, support, load, …

All languages will be very close printing “Hello, World”:

printf("Hello, World");

print "Hello, World"

or

Console.WriteLine("Hello, World");

To D:

Every HTTP connection in this test has its own session; the program maintains all the sessions and the concurrency logic, not just “socket performance”. After adding a little session state or logic code, it becomes a real business server.

If you compare these results with Ruby/PHP, there will be a difference, and if your own server needs to handle 5,000+ concurrent connections, this test may be informative for you.

To Tim:

Maybe. Sorry, I haven’t checked the source code.

But I’m still not sure about this type of test. I mean, I can’t see how useful it can be for someone who wants to create the next Google. Very different languages can give you success: Facebook chat uses Erlang, Twitter was using Ruby, StackOverflow is written in C#, Google is using Python, eBay uses Java, …

[…] C, Erlang, Java and Go Web Server performance test […]

Why not compare a pure C++ project called ‘poco’ too? It also provides a network framework and an HTTP server sample, see http://pocoproject.org/wiki/index.php/Tutorialftpclient

Maybe these files can help scale Go’s performance, but I know little about Go, so I am not sure.

/usr/src/linux-2.6.30/robust-futexes.txt

rt-mutex.txt

pi-futex.txt

robust-futex-ABI.txt

[…] has already done a very good test of C (nginx), Erlang, Java and Go: http://timyang.net/programming/c-erlang-java-performance/ The test is quite complete, but the comments that follow […]

You get very strange results for nginx. I wrote a custom module for nginx with some business logic (3 selects against a TimesTen DB). For the tests I use an HP ProLiant BL460c G1 blade with two 4-core CPUs.

/proc/cpuinfo

model name : Intel(R) Xeon(R) CPU E5345 @ 2.33GHz

stepping : 8

cpu MHz : 2327.203

cache size : 4096 KB

I get ~80,000 requests per second with ab -c 100.

#uname -a

Linux timesten1.testik.com 2.6.18-92.el5 #1 SMP Tue Apr 29 13:16:15 EDT 2008 x86_64 x86_64 x86_64 GNU/Linux

vromanov,

did you use the ab -k (keepalive) option? I think it is hard to reach 80k rps without -k.

yes! I use -k

This is my string

ab -k -c 100 -n 20000000 "${URL}" >> ${LOG}
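The gap between these numbers and the ones in the post is consistent with keep-alive: the post's ab commands do not pass -k and its nginx.conf sets keepalive_timeout 0, so every request pays for a new TCP connection. The sketch below illustrates that effect against a throwaway local Go server (not nginx, and not a benchmark) by counting how many connections the server accepts with and without keep-alive. The function name countConnections is made up for illustration.

```go
package main

import (
	"fmt"
	"io"
	"net"
	"net/http"
	"net/http/httptest"
	"sync/atomic"
)

// countConnections sends n sequential GET requests to a throwaway local
// server and reports how many TCP connections the server accepted.
func countConnections(n int, keepAlive bool) int64 {
	var opened int64
	srv := httptest.NewUnstartedServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		io.WriteString(w, "hello, world!\n")
	}))
	// Count every newly accepted connection.
	srv.Config.ConnState = func(c net.Conn, s http.ConnState) {
		if s == http.StateNew {
			atomic.AddInt64(&opened, 1)
		}
	}
	srv.Start()
	defer srv.Close()

	client := &http.Client{Transport: &http.Transport{DisableKeepAlives: !keepAlive}}
	for i := 0; i < n; i++ {
		resp, err := client.Get(srv.URL)
		if err != nil {
			panic(err)
		}
		io.Copy(io.Discard, resp.Body) // drain so the connection can be reused
		resp.Body.Close()
	}
	return atomic.LoadInt64(&opened)
}

func main() {
	fmt.Println("with keep-alive:   ", countConnections(5, true), "connection(s)")
	fmt.Println("without keep-alive:", countConnections(5, false), "connection(s)")
}
```

With keep-alive the five requests share one connection; without it each request opens its own, which is the extra work the non-keep-alive numbers in this post include.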

The Go GC probably accounts for much of the difference here. The Go garbage collector, by the language designers’ own admission, needs some improvements.

You should easily see 20k+ requests/sec on a quad core cpu with the garbage collector turned off. While running the test with the GC off is not really valid, it does give a clear indication where there is *lots* of room for improvement and does give a hint of what the language ought to be capable of given time to mature.

You could test this with:

export GOGC=off && taskset -c 1-7 ./6.out

I guess you’ll need somewhere between 1-2 GB of system RAM with the GC turned off.
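On a current Go toolchain the same experiment can also be done from inside the program, since debug.SetGCPercent(-1) has the same effect as GOGC=off. This is a sketch for modern Go, not something available in the 2009 release tested here; the helper names disableGC and gcPercent are made up for illustration.

```go
package main

import (
	"fmt"
	"runtime/debug"
)

// disableGC turns the garbage collector off, like GOGC=off, and returns a
// function that restores the previous setting.
func disableGC() (restore func()) {
	prev := debug.SetGCPercent(-1)
	return func() { debug.SetGCPercent(prev) }
}

// gcPercent reads the current setting by setting it and putting it back,
// because the runtime exposes no direct getter.
func gcPercent() int {
	p := debug.SetGCPercent(-1)
	debug.SetGCPercent(p)
	return p
}

func main() {
	restore := disableGC()
	defer restore()
	fmt.Println("GC percent during the run:", gcPercent())
}
```

As the comment above notes, a run with the collector off is a diagnostic rather than a valid benchmark, and memory grows until the process exits.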

I hope you include node.js in the next version of this fantastically useful performance test.